I’m following the Unity RPG Core Combat Creator: Learn Intermediate C# Coding course and have just reached the Raycasting For Component part.

Because of how my game’s world is generated, and how I optimize what’s visible and what’s enabled in the physics engine, I had already implemented a layering system that lets me restrict each ray cast to specific layers depending on what I’m looking for.

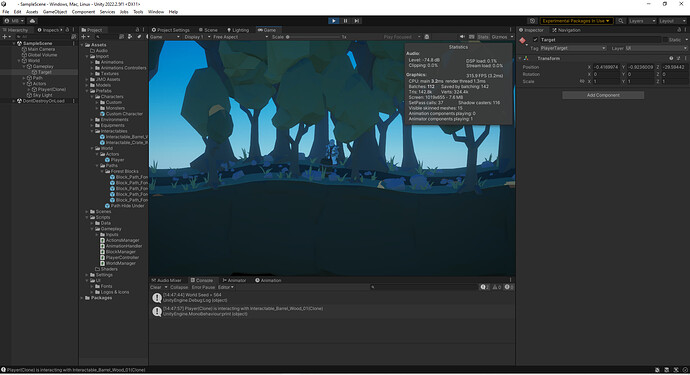

This is because, unlike the course, which uses the terrain as the main “target” for movement and views it from the sky, I’m generating a dynamic level seen from the side to create a Metroidvania experience. In my case, half of the screen is just empty space, so I had to implement a way for ray casts to work even there, since I’m building controls that work with touchscreen (or pen), controllers, and mouse & keyboard. (You can control the character either directly, as in a Metroidvania, or by clicking/pressing a target or area and auto-moving to it.)

I’m using a similar approach to level generation as the MMORPG Warframe, but on a different (usually smaller) scale. The world is made out of a series of fully or partially predetermined blocks. For regular path-based blocks, each block is roughly a 5-meter cube (in-game units). Whenever needed, I can generate larger blocks for specific areas like a building, or just change the biome between a forest, a town, a mine, a volcano, etc. I also made the map generation system allow various lengths, from a small 10 blocks (50 m) for something like a boss arena up to 1500 blocks (7.5 km), which at ~4.5 m/s takes about 30 minutes of real time to cross, not including any combat, inventory/stats management, etc. along the way. (On a potato laptop, I was able to get decent load times under 3 seconds even with 3000 blocks, but since I want to allow cross-platform co-op even on average mobile devices, I’m currently capping the length at 1500 blocks to keep load times reasonable.)
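To double-check the numbers above, here is a tiny sketch of the length and crossing-time arithmetic. The class and member names are made up for illustration; the 5 m block length and ~4.5 m/s speed are the values quoted in this post.

```csharp
// Hypothetical helper: names are illustrative, values come from the post.
public static class MapLength
{
    public const double BlockLengthMeters = 5.0;        // one regular path block
    public const double WalkSpeedMetersPerSecond = 4.5; // approximate move speed

    // Total length of a map made of `blocks` regular blocks.
    public static double Meters(int blocks) => blocks * BlockLengthMeters;

    // Real-time minutes needed to walk the whole map without stopping.
    public static double MinutesToCross(int blocks) =>
        Meters(blocks) / WalkSpeedMetersPerSecond / 60.0;
}
```

With these values, `MapLength.Meters(1500)` is 7500 m and `MapLength.MinutesToCross(1500)` comes out just under 28 minutes, which is where the "about 30 minutes" figure comes from.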

I’m using a particle system to generate most of the decorative elements on each block, like trees, grass, rocks, etc. (which are located in the foreground and background).

As such, I use a different approach when it comes to target selection by using Unity’s Physics layers.

This lets me reduce the objects detected by any ray cast to just what I truly need, by putting a Layer Mask on the ray cast, and also determine what takes priority by simply checking the layer of every object that passes the Layer Mask.

For example, I have the layers set as “6:Ground”, “7:Zone”, “8:Players”, “9:Hostiles”, “10:Environment”, “11:Allies”, “12:Interactable” and “13:Body Parts”.

If the player is clicking normally, the ray cast only checks layers 7, 9 and 12.
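For anyone who hasn’t built a layer mask by hand before, here is a minimal sketch of what “only layers 7, 9 and 12” means as a bitmask. It is plain C# with no Unity dependency, and the `GameLayers` class and its members are names I made up for illustration; the layer indices are the ones listed above.

```csharp
// Hypothetical helper: only the layer indices come from the post.
public static class GameLayers
{
    public const int Ground = 6;
    public const int Zone = 7;
    public const int Players = 8;
    public const int Hostiles = 9;
    public const int Environment = 10;
    public const int Allies = 11;
    public const int Interactable = 12;
    public const int BodyParts = 13;

    // Builds a bitmask with one bit set per layer index.
    public static int MaskOf(params int[] layers)
    {
        int mask = 0;
        foreach (int layer in layers)
        {
            mask |= 1 << layer;
        }
        return mask;
    }

    // True when the given layer index is part of the mask.
    public static bool Contains(int mask, int layer) => (mask & (1 << layer)) != 0;

    // Mask for a regular click: Zone, Hostiles and Interactable only.
    public static readonly int RegularClickMask = MaskOf(Zone, Hostiles, Interactable);
}
```

In Unity, an integer like `GameLayers.RegularClickMask` is the kind of value you can pass as the `layerMask` argument of `Physics.RaycastAll`. `LayerMask.GetMask("Zone", "Hostiles", "Interactable")` should produce the same bits, assuming the layers are named exactly that in the project settings.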

Then the controller script goes through each potential target and caches the transform of the highest-priority one: first anything on layer 9, then layer 12. Finally, if the only hit is on layer 7 (a box trigger collider that covers each block in the world), I take the position of the ray cast hit point and snap it to the block’s height and depth.

If this ray cast returns no hits, the player’s NavMeshAgent destination is simply not updated.
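The priority pass for a regular click can be sketched roughly like this. It is not my actual controller code, just the idea in plain C#: `LayerHit` is a hypothetical stand-in for the data you would read from a Unity `RaycastHit` (the collider’s layer and the hit point), and I’m assuming X runs along the path while Y is height and Z is depth.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for the layer and point of a Unity RaycastHit.
public readonly struct LayerHit
{
    public readonly int Layer;
    public readonly float X, Y, Z;

    public LayerHit(int layer, float x, float y, float z)
    {
        Layer = layer; X = x; Y = y; Z = z;
    }
}

public static class ClickTargeting
{
    const int Zone = 7, Hostiles = 9, Interactable = 12;

    // Lower rank means higher priority; other layers are ignored.
    static int Rank(int layer)
    {
        switch (layer)
        {
            case Hostiles: return 0;
            case Interactable: return 1;
            case Zone: return 2;
            default: return int.MaxValue;
        }
    }

    // Picks the highest-priority hit; returns false when nothing usable was hit,
    // in which case the caller leaves the current destination untouched.
    public static bool TryPick(IReadOnlyList<LayerHit> hits, out LayerHit best)
    {
        best = default;
        int bestRank = int.MaxValue;
        foreach (LayerHit hit in hits)
        {
            int rank = Rank(hit.Layer);
            if (rank < bestRank)
            {
                bestRank = rank;
                best = hit;
            }
        }
        return bestRank != int.MaxValue;
    }

    // For a Zone hit: keep the position along the path (assumed to be X),
    // but use the block's own height and depth instead of the raw hit point.
    public static (float x, float y, float z) SnapToBlock(
        LayerHit zoneHit, float blockHeight, float blockDepth)
    {
        return (zoneHit.X, blockHeight, blockDepth);
    }
}
```

Ranking every hit instead of trusting the first one matters because, as far as I know, `Physics.RaycastAll` does not guarantee the order of its results.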

If the player clicks while readying a healing spell (upcoming feature), the ray cast looks at layers 8, 11 and 9. It first looks for players (to prioritize a self-heal or healing another player), then allies (NPCs such as summons or temporary NPC allies). If none of the targets are on layer 8 or 11, it goes through layer 9 (hostiles), and if an enemy can be damaged by such a spell (like an undead), damage is dealt to that hostile target.
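Since the healing spell is still an upcoming feature, the following is only a sketch of how that priority could be resolved. All names are hypothetical, and `HarmedByHealing` is an assumed flag standing in for whatever check ends up deciding that a hostile (like an undead) takes damage from the spell.

```csharp
using System.Collections.Generic;

// Hypothetical candidate produced from a ray cast hit.
public readonly struct SpellCandidate
{
    public readonly int Layer;
    public readonly bool HarmedByHealing; // assumed flag, e.g. true for undead

    public SpellCandidate(int layer, bool harmedByHealing)
    {
        Layer = layer;
        HarmedByHealing = harmedByHealing;
    }
}

public enum HealOutcome { None, Heal, Damage }

public static class HealTargeting
{
    const int Players = 8, Hostiles = 9, Allies = 11;

    // Players first, then allies, then hostiles that the spell can hurt.
    static int Rank(SpellCandidate candidate)
    {
        switch (candidate.Layer)
        {
            case Players: return 0;
            case Allies: return 1;
            case Hostiles: return candidate.HarmedByHealing ? 2 : int.MaxValue;
            default: return int.MaxValue;
        }
    }

    // Returns what the spell does and the index of the chosen candidate (-1 if none).
    public static HealOutcome Resolve(IReadOnlyList<SpellCandidate> hits, out int targetIndex)
    {
        targetIndex = -1;
        int bestRank = int.MaxValue;
        for (int i = 0; i < hits.Count; i++)
        {
            int rank = Rank(hits[i]);
            if (rank < bestRank)
            {
                bestRank = rank;
                targetIndex = i;
            }
        }
        if (targetIndex < 0) return HealOutcome.None;
        return hits[targetIndex].Layer == Hostiles ? HealOutcome.Damage : HealOutcome.Heal;
    }
}
```

A hostile that isn’t affected by the spell simply gets no rank, so clicking on it while the spell is readied does nothing.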

The cool thing about using layers is that a layer is a semi-permanent reference (an integer) that can be easily read if you cache the target’s Transform. By sharing that Transform with the various modules through the layers of scripts (whenever needed), from either the PlayerController or whatever AI script we’ll make later, each module can look up the target’s layer to know what kind of target it is and then get, cache, or update the kind of component it needs.

For example, an enemy target might have a different set of components/scripts than a door, lever, or chest. Instead of working out what’s what by trying to get specific components, I’m already factoring in the kind of target as early as the ray cast, and I avoid spending processing time on getting components that aren’t related to the active target. (One good example will be when I implement the outline on the target. Outlines are only generated on Players, Allies, Enemies and Interactables, which means that by looking up the layer I know whether I have to fetch the component that sets an outline, and whether I have to remove a previous outline, right at the moment a target is selected and, if applicable, an old target is no longer selected.)
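Here is a rough sketch of that outline bookkeeping, driven only by the layer integer. The outline feature isn’t implemented yet, so every name here is hypothetical, and targets are represented by a plain integer id instead of a Transform to keep the example free of Unity types.

```csharp
public enum TargetKind { None, Zone, Player, Hostile, Ally, Interactable }

public static class TargetKinds
{
    // Maps the layer indices from the post to a kind of target.
    public static TargetKind FromLayer(int layer)
    {
        switch (layer)
        {
            case 7: return TargetKind.Zone;
            case 8: return TargetKind.Player;
            case 9: return TargetKind.Hostile;
            case 11: return TargetKind.Ally;
            case 12: return TargetKind.Interactable;
            default: return TargetKind.None;
        }
    }

    // Only players, allies, hostiles and interactables get an outline.
    public static bool CanBeOutlined(TargetKind kind) =>
        kind == TargetKind.Player || kind == TargetKind.Ally ||
        kind == TargetKind.Hostile || kind == TargetKind.Interactable;
}

public sealed class OutlineTracker
{
    private int? outlinedId; // id of the target currently outlined, if any

    // Called when a target is selected. Returns which target should lose its
    // outline and which should gain one (null means "nothing to do").
    public (int? remove, int? add) Select(int targetId, int layer)
    {
        bool outlinable = TargetKinds.CanBeOutlined(TargetKinds.FromLayer(layer));
        int? add = outlinable ? targetId : (int?)null;
        if (add == outlinedId) return (null, null); // no change needed

        int? remove = outlinedId;
        outlinedId = add;
        return (remove, add);
    }
}
```

In Unity, the layer would come from the cached Transform (`target.gameObject.layer`), and a component lookup such as `TryGetComponent` would only happen for the ids returned in `remove` and `add`, so clicking on a Zone never costs an outline component lookup for the new target.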

I’m basically killing 3 birds with 1 stone with that system.

For those wondering why there are layers for Ground, Environment and Body Parts, here are their uses:

Layer 6 (Ground) is the layer used solely by the NavMeshSurface component when I generate the NavMesh data once the world is generated. This lightens the processing load of the dynamic level generation by a ton, and it also lets me deliberately set predetermined path widths and directions instead of letting the NavMeshSurface component work them out blindly.

Layer 10 (Environment) is almost a render-only layer. I use it for elements in the foreground and background that have little to no interaction. It’s good practice to have a layer for such things in case some of the interactive stuff you generate live ends up on the Default layer.

Layer 13 (Body Parts) is a layer used only by the physics engine for hit registration and target detection. It’s used for things like body part selection on a boss with multiple selectable parts (think of the classic Hydra with 3 heads that can be selected and destroyed individually). It’s also used when a physics-based attack (like arrows or ranged directional spells) hits from a particular direction in certain circumstances. The effect of an arrow or ranged directional spell may look different if it hits a shield, and I might need the exact position where it hits for the after-hit effect (like an arrow temporarily stuck in the shield, a fireball that explodes back away from the shield, or a laser that is reflected out of the way).

I just wanted to share my own approach to the raycasting in my project.