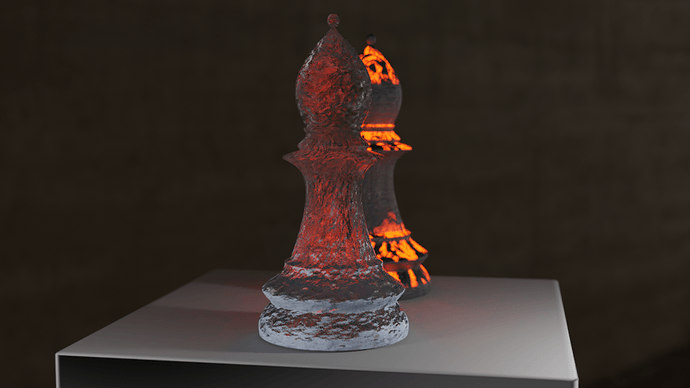

A quick job, but not as quick as I would have liked.

I added a UV sphere for the top and cut off its bottom, leaving an edge ring to connect to. For reasons I cannot explain, cutting off the tip of the modified low-poly pawn took much longer. I used Ctrl-R to add a new edge loop, but I could not place it very close to the tip.

I am also disappointed by the workflow when working with a reference image:

- Z: switch to wireframe
- Select vertices/edges/faces
- Z: switch to textured view to see the reference again
- Do the operation

I often used loop selection to avoid that, but my window manager intercepts Alt, so only Shift-Alt-left-click (modify selection) works. Is there really no way to force textured view for just the reference image while the rest of the scene stays in solid view?

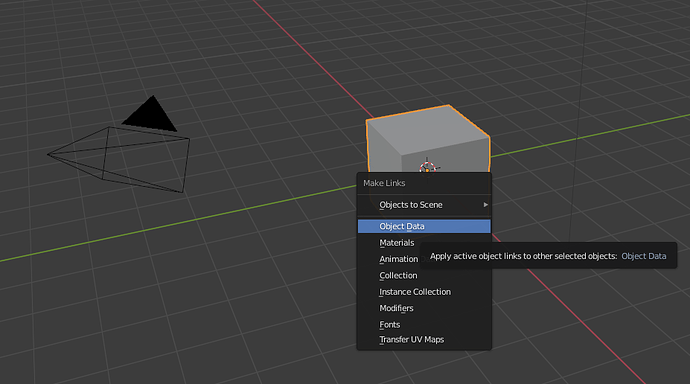

To end on a better note, I studied the Object / Object Data link for materials and found it quite easy to share the mesh between the white and the black bishops. Unfortunately, applying object-level transforms to a shared mesh no longer works. This hurts the workflow, since my workaround is to make the mesh single-user, make the changes, then update every other object to use the new mesh. When the only other user is the black bishop, that is fine, but if a large model had hundreds of copies of an object (for instance, identical doors in a large building painted in various colours), it would not be practical.

I imagine that scaling in Edit mode, and thereby avoiding the Apply step, would have worked.
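Why baking an object's transform into a shared mesh is a problem, and why scaling the mesh data directly (as in Edit mode) sidesteps it, can be shown with a toy model. This is a minimal sketch in plain Python, not Blender's API: the `Mesh` and `Obj` classes and the `apply_scale` and `scale_mesh` functions are hypothetical, and only uniform scale is modelled.

```python
from dataclasses import dataclass


@dataclass
class Mesh:
    # Hypothetical stand-in for mesh data: local-space vertex positions,
    # shared by every object that links to this mesh.
    verts: list


@dataclass
class Obj:
    # Hypothetical stand-in for an object: a link to mesh data plus its own
    # object-level (uniform) scale.
    mesh: Mesh
    scale: float = 1.0

    def world_verts(self):
        # What you see in the viewport: object scale applied to mesh coordinates.
        return [tuple(self.scale * c for c in v) for v in self.mesh.verts]


def apply_scale(obj):
    # "Apply" step: bake this object's scale into the mesh, then reset it to 1.
    # Because the mesh is shared, every other user sees the changed data too.
    obj.mesh.verts = [tuple(obj.scale * c for c in v) for v in obj.mesh.verts]
    obj.scale = 1.0


def scale_mesh(mesh, factor):
    # Edit-mode-style scaling: change the shared data directly, so all users
    # change together and no object-level scale needs applying.
    mesh.verts = [tuple(factor * c for c in v) for v in mesh.verts]


shared = Mesh([(0.0, 0.0, 1.0)])
white, black = Obj(shared, 2.0), Obj(shared, 2.0)
apply_scale(white)
print(white.world_verts())  # [(0.0, 0.0, 2.0)]: white bishop looks unchanged
print(black.world_verts())  # [(0.0, 0.0, 4.0)]: black bishop doubled in size
```

In this model, applying the white bishop's scale silently doubles the black one, which is presumably why the Apply step is refused for multi-user data; calling `scale_mesh` on the shared mesh instead keeps every copy consistent.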