What NP5 wrote is correct. Let me expand a bit more.

Q1. On 30xx (and 20xx) series cards, OptiX should give you better performance because it uses the dedicated ray-tracing hardware (RT cores) on those cards. The difference in my case isn't huge, but every second saved, multiplied by the many renders you make in a year, adds up :-). Interestingly, it also worked on older cards, but on my old 1080 Ti the improvement was small and very dependent on what I was rendering, so after some tests I didn't bother with it.

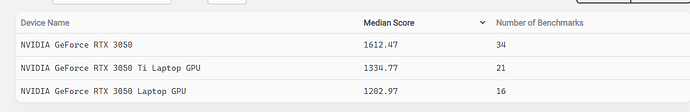
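To put the "every second adds up" point into numbers, here is a tiny back-of-the-envelope helper. The figures in it (5 seconds saved per frame, 20,000 frames a year) are purely hypothetical assumptions for illustration, not measurements from my renders.

```python
# Hypothetical numbers only: plug in your own per-render saving and volume.
SECONDS_PER_HOUR = 3600


def hours_saved_per_year(seconds_saved_per_render: float, renders_per_year: int) -> float:
    """Total hours saved in a year if each render finishes a bit faster."""
    return seconds_saved_per_render * renders_per_year / SECONDS_PER_HOUR


if __name__ == "__main__":
    # Assumed example: 5 s saved per frame, 20,000 frames rendered per year
    print(f"{hours_saved_per_year(5, 20_000):.1f} hours")
```

With those assumed numbers it prints `27.8 hours`, i.e. more than a full day of rendering time per year.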

There's an official benchmarking site for Blender (with crowdsourced data) which shows that you should see big gains with OptiX (higher scores are better):

*[Benchmark results for OptiX and CUDA (screenshots not preserved)]*
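Since higher scores are better, the ratio of the OptiX score to the CUDA score gives a rough speedup, which you can use to estimate how long a render that currently takes some time with CUDA would take with OptiX. This is a sketch that assumes render time is inversely proportional to the benchmark score, which only holds approximately for real scenes; the scores below are placeholders, not values from the benchmark site.

```python
def optix_speedup(optix_score: float, cuda_score: float) -> float:
    """How many times faster OptiX is than CUDA (higher score = faster)."""
    if cuda_score <= 0:
        raise ValueError("cuda_score must be positive")
    return optix_score / cuda_score


def estimated_optix_time(cuda_seconds: float, optix_score: float, cuda_score: float) -> float:
    """Estimate OptiX render time, assuming time scales inversely with the score."""
    return cuda_seconds / optix_speedup(optix_score, cuda_score)


if __name__ == "__main__":
    # Placeholder scores (assumed), NOT taken from the benchmark site
    speedup = optix_speedup(optix_score=1500.0, cuda_score=1000.0)
    print(f"speedup: {speedup:.2f}x")
    minutes = estimated_optix_time(600.0, 1500.0, 1000.0) / 60
    print(f"10-minute CUDA render -> {minutes:.1f} min with OptiX")
```

With these made-up scores it reports a 1.50x speedup and turns a 10-minute CUDA render into roughly 6.7 minutes.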

Q2. Unless your hardware is faulty (in which case it will break no matter what you do with it) or is used in bad conditions (a huge amount of dust clogging the GPU fans, unstable power from a faulty or cheap PSU, etc.), you are safe.

For additional (anecdotal) reassurance: I still have a 1080 Ti in my old desktop from 2018. I frequently used it for render jobs that could take many days, and it still works fine to this day.

As a side note about crypto mining: rendering uses more parts of the GPU than mining does, especially with OptiX, and it is harder on the card because temperatures fluctuate more (gaming is even worse in that regard). But GPUs are (and have been for quite some time) designed with constant heavy load in mind, so there is nothing to worry about. There are also multiple levels of failsafes built in (e.g., if the VRAM on your GPU starts overheating, it will simply start to throttle, i.e. slow down, at around 10 °C below the factory-rated maximum temperature).

So no worries: render away to your heart's content! And with a 3050 you will have a great time doing it!

Edit: added link to blender benchmarking site